When it comes to military embedded computing, basically only two microprocessor manufacturers slug it out for the lion’s share of the defense and aerospace embedded computer market for applications like radar processing—Freescale Semiconductor Inc. in Austin, Texas, and Intel Corp. of Santa Clara, Calif.

While Freescale has been this market’s 500-pound gorilla for some time, Intel’s introduction of its latest Core i7 microprocessor in January is turning the defense embedded systems community on its head, in a transformation the likes of which seasoned observers have not seen in years.

The newest Core i7 “is unlike anything we’ve got,” says Frank Willis, director of military and aerospace product development at GE Intelligent Platforms in Albuquerque, N.M. “It’s going to set the standard for performance as we move ahead. This gives Intel unbelievable capability and entry into the market.”

Sure, there are plenty of other important microprocessor makers—Advanced Micro Devices Inc. (AMD) of Sunnyvale, Calif.; ARM Inc. of Los Gatos, Calif.; Cavium Networks of Mountain View, Calif.; and MIPS Technologies Inc. of Mountain View, Calif. among them—but for military embedded applications, Freescale primarily, and to a lesser extent Intel, have been the processors of choice.

Of the two leading companies, Freescale has been by far the more dominant over the past several years, based on its marquee embedded microprocessor, the PowerPC (and later Power Architecture) with the AltiVec floating-point and integer SIMD instruction set, for applications like radar, sonar, and signals intelligence that need floating-point processing.

The performance, flexibility, and power consumption of the Power Architecture has kept Freescale on the top of the mountain for a long time, and not just because of floating-point processing. The Power Architecture also fits well with the VME backplane databus that has dominated military embedded computing for much of the chip’s reign.

Aerospace and defense systems designers, despite getting what they needed from Freescale, always wanted an alternative source of microprocessors, just in case Freescale changed its microprocessor architectures.

For a while, defense and aerospace embedded computer makers hung their hopes on a company in Santa Clara, Calif., called P.A. Semi as an alternative to Freescale. P.A. Semi experts were developing a powerful and power-efficient Power Architecture processor called PWRficient, which met their needs. Hopes were dashed, however, when Apple Computer acquired P.A. Semi, and took P.A. Semi products off the open market.

Then the fears of high-end military systems designers were realized. Freescale, in a bid to dominate the cell phone and handheld appliance market, decided to abandon AltiVec and floating-point capability in its latest generation of microprocessors, which dropped a monkey wrench into long-term planning among the military embedded computing companies. “The Freescale PowerPC roadmap is dead-ended now at AltiVec,” says Doug Patterson, vice president of marketing at Aitech Defense Systems Inc. in Chatsworth, Calif.

Then Intel quietly made it known that help was on the way; the company was working on a high-performance, low-power chip with floating-point capability. Embedded computer designers made plans to take advantage.

Intel formally altered the balance in January 2010 with its announcement of the latest-generation Core i7 microprocessor with floating-point capability. Several embedded computing products aimed at aerospace and defense applications were introduced within hours of the Intel Core i7 introduction, with additional products coming out nearly every day afterwards.

“The biggest feature of the Core i7 is the floating-point performance,” says Ben Klam, vice president of engineering at Extreme Engineering Solutions (X-ES) in Middleton, Wis. “Now they are getting into lower-power embedded applications with good performance, which will help any military applications that benefit from floating point, like radar and signal processing.”

None of this is to say that Freescale will not be part of aerospace and defense applications in the future; far from it.

Steve Edwards, chief technology officer at Curtiss-Wright Controls Embedded Computing in Leesburg, Va., says the military and aerospace embedded computing market essentially has three components—high-end digital signal processing (DSP) applications; general-purpose processing; and low-power mobile applications.

Edwards says Intel may well come to dominate military DSP applications in the near term because of the Core i7’s floating-point capability, yet he sees continued vigorous competition between Intel and Freescale in general-purpose processing and low-power embedded computing applications.

General-purpose processing in aerospace and defense applications “is split between the Power Architecture and Intel,” Edwards says. “We see a huge market for the Freescale Power Architecture for highly integrated applications that need multiple cores, Ethernet controllers, and very small-footprint solutions.”

General-purpose processing applications moving into the Intel camp, meanwhile, favor Intel’s tie-in with the Windows desktop operating system, other commercial software with familiar man-machine interfaces, and embedded Linux, he says.

Low-power applications such as man-portable systems and small unmanned aerial vehicle (UAV) payloads also should remain a tossup between Intel and Freescale in the future, Edwards says, as both companies offer equivalent products.

In high-end DSP applications, however, aerospace and defense systems designers are coming out with distinct preferences for the new Intel microprocessor. “The bar for this capability has been set,” says GE’s Willis. “You will have to see Freescale step up to this level to stay competitive.”

Cyber Mania

Thursday, December 2, 2010

Monday, November 15, 2010

Device = “computer power” not computer

Sometimes with advances in technology, people impose the use patterns of a previous system on the new one. This happened with cars - which were referred to as horseless carriages (and we still talk about horsepower in our cars today). It happened with films - which were originally shot only in the framing of the proscenium arch. (It took DW Griffith’s Birth of a Nation to shift to close-ups, jump cuts, and tracking shots.) And it’s happened again and again in the tech industry.

It makes sense why this happens. People look for patterns and always want to compare something new to something known. But understanding the true value of new technologies often requires breaking free from the paradigms of the past. Today’s Wi-Fi and internet enabled devices are an excellent example.

Today’s devices have more processing power than the NASA computers that originally put men on the moon - but they are not computers. What a consumer wants from their handset or smartphone isn’t a computer. They don’t want to have to wait while it boots up, they don’t want to have to log in and enter passwords, and they certainly don’t want to have to maneuver through menus to get the data they desire or take the actions they want. So it’s clear what people don’t want. What they do want is dictated by the device. If you bought a smartphone, you want:

1. a phone

2. calendar

3. email

4. messaging

5. all other stuff (I put games, music - even a camera in this bucket - but this is a personal assessment)

Other devices are even easier. You buy a digital camera with Wi-Fi access - you want a decent camera first. Media player = I want media. Internet Radio - I love music. GPS = I don’t want to stop to ask for directions, so I seriously don’t want to stop and log in ;-). Because people want different things from their devices than they want from their laptops, we can expect different use patterns for how, and why and where these people access the internet from their devices.

It’s exactly this new paradigm of use that Dave Fraser recently spoke about with the keenly insightful journalist Bryan Betts of Tech World.

Bryan’s article begins:

“Making sense of who does what in the wireless business can be tough work” but as the article goes on it begins exploring ways that devices are different from computers. Dave weighs in on this:

- “…Devices are not like PCs - they are more batch-orientated. There are some browsing devices, but most are more purposeful, and typically it’s a single-purpose device that gets the market share - think of movie players and games systems. “For example, your digital camera could send a photo to Flickr or your home PC. There’s a job to be done - the device wants to get on the network, do its thing, then get off again. Very few will want to stay on for long, that’s just for browsing or games.”

I think we need to let go of the old paradigm and allow these next generation devices to be simply - “service enabled” and empowered by the internet. This frees businesses and consumers to explore the new devices in an appropriate context and find the true values that these will afford. These devices are “computer-powered” but are not computers.

Mobile VoIP

As with all technology - it’s what it does for us and not what it is that matters. In an effort to focus on what it does, this month we will be examining mobile VoIP - or voice over Internet protocol. In this installment, we’ll look at what mobile VoIP is and provide a quick overview of the industry and environment. Future installments will review how you can use mobile VoIP on your devices.

What it is:

Mobile VoIP is simply VoIP access via a mobile device. The power of VoIP is that it allows inexpensive or even free calls to be made over the internet. Now with Mobile VoIP, you can take the freedom and flexibility of VoIP with you wherever you go. There are numerous VoIP clients for mobile devices including ones from Skype, fring, Gizmo and Truphone. There are many other VoIP providers, but all of these mentioned have clients for smartphones and/or Internet Tablets. Four technologies are required for mobile VoIP: a device, client software, a wireless network and a VoIP service.

Industry and Environment:

The ideas behind VoIP go back to the early 1970s, when voice was first sent experimentally over packet networks (pre-history I know ;-). According to VoIP Monitor, revenue in the total VoIP industry in the US is set to grow by 24.3% in 2008 to $3.19 billion. Mobile VoIP is estimated to grow to US$12 billion by 2010 in Europe alone. Skype is perhaps the best-known VoIP client: last year they reported half a billion downloads, and this year they are approaching a billion.

Benefits of Mobile VoIP:

The big benefit is that VoIP saves you significant $ca-ching$. Cost savings come primarily from reducing or eliminating roaming rates and fees, as well as high fees for international calls. For folks who live in the US (where most plans allow you to roam across the country), the best value comes from using VoIP when traveling outside the country or when making international calls. In Europe, where crossing a border can be minutes away and mean the difference between included coverage and roaming rates, mobile VoIP is even more popular.

In short, mobile VoIP is an easy, effective and inexpensive way to stay in touch when you are roaming or making international calls.

Blood camera to spot invisible stains at crime scenes

Call it CSI: Abracadabra. A camera that can make invisible substances reappear as if by magic could allow forensics teams to quickly scan a crime scene for blood stains without tampering with valuable evidence.

The prototype camera, developed by Stephen Morgan, Michael Myrick and colleagues at the University of South Carolina in Columbia, can detect blood stains even when the sample has been diluted to one part per 100.

At present, blood stains are detected using the chemical luminol, which is sprayed around the crime scene and reacts with the iron in any blood present to emit a blue glow that can be seen in the dark. However, luminol is toxic, can dilute blood samples to a level at which DNA is difficult to recover, and can smear blood spatter patterns that forensic experts use to help determine how the victim died. Luminol can also react with substances like bleach, rust, fizzy drink and coffee, causing it to produce false positives.

The camera, in contrast, can distinguish between blood and all four of these substances, and could be used to spot stains that require further chemical analysis without interfering with the sample.

To take an image of a scene, the camera beams pulses of infrared light onto a surface and detects the infrared that is reflected back off it. A transparent, 8-micrometre-thick layer of the protein albumin placed in front of the detector acts as a filter, making a dilute blood stain show up against its surroundings by filtering out wavelengths that aren't characteristic of blood proteins.

By modifying the chemical used for the filter, it should be possible to detect contrasts between a surface and any type of stain, says Morgan. "With the appropriate filter, it should be possible to detect [sweat and lipids] in fingerprints that are not visible to the naked eye," he says. "In the same way you could also detect drugs on a surface, or trace explosives."

Sunday, November 14, 2010

New printer produces 3D objects on demand

Imagine a machine that accepts CAD drawings, then produces a three-dimensional prototype within a few hours for $100 - it now exists. The successful implementation points the way to this technology eventually reaching local bureaus that produce while-you-wait samples as a service, and eventually the home, where designs could be downloaded from the internet and manifested at whim.

American Z Corporation now has several models of 3D printers that produce physical prototypes quickly, easily, and inexpensively from computer-aided design (CAD) and other digital data.

In the same way that conventional desktop printers provide computer users with a paper output of their documents, Z Corp.'s 3D printers provide 3D CAD users a physical prototype of real world objects such as a mobile phone, an engine manifold, or a camera.

The process operates in a remarkably similar fashion to the ink-jet printer, building layer upon layer of powder and a bonding agent which creates the object, and it can even be done in full colour.

Though only available for a short time, the machines have already found their way into the world's best known R&D studios - Sony, Fisher-Price, Adidas, Canon, Kodak, NASA, Harley-Davidson, Lockheed Martin, Northrop Grumman, BMW, Porsche, Ford, DaimlerChrysler, Harvard, MIT and Yale.

At the bottom end of the market, the most recent entry-level rapid prototyping system from Z Corp. sells for around US$25,000, providing customers with an all-purpose solution for their modeling needs.

The ZPrinter System completes the Z Corp. 3D Printer product line, which includes the Z406 Full Color 3D Printer and the Z810 Large Format 3D Printer. Z Corporation offers a range of materials to support its 3D Printers, including the new ZCast powder, used to create molds for metal casting on the Z Corp. 3D Printing Systems.

Z Corp.'s revolutionary ZCast technology addresses the needs of the metal casting industry and end users who seek to rapidly produce metal prototype parts. The ZCast technology involves printing metal casting molds directly from digital data. The process drastically reduces the time it takes to produce a casting from weeks to days. In addition to the ZCast process, the Z Corp. technology can be used to create patterns for the sand casting or investment casting of metal parts.

Types of Magnetic Storage Devices of a Computer

Hard Drive Storage Device

Hard drives are the most popular storage subsystem in computers. They are responsible for storing persistent information, and because of that persistence the hard drive is considered the most important computer storage system sold on the market.

Magnetic Tape Storage Device

A magnetic tape storage device contains a plastic tape coated with magnetic material, which records characters as combinations of points on tracks parallel to the longitudinal axis of the tape. These tapes are sequential media. That is the main problem with magnetic tape: to access a given piece of information, the drive must first read all the data that precedes it, which costs time.

Magnetic Drum Storage Device

A magnetic drum storage device contains either a hollow or a solid cylinder, which rotates at a constant velocity. The cylinder is covered with magnetic material capable of retaining information, which is recorded and read by a head whose arm moves in the direction of the drum’s axis of rotation. On a magnetic drum, access to information is direct rather than sequential as on magnetic tape.
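
The contrast between sequential access on tape and direct access on a drum can be illustrated with a toy cost model. This is only a sketch: the function names and the "records read" cost measure are invented for illustration, and rotational delay on the drum is ignored.

```python
def tape_records_read(target_index: int) -> int:
    """Sequential medium: every record before the target passes the head first."""
    # Records 0..target_index are all read, so the cost grows with position.
    return target_index + 1


def drum_records_read(target_index: int) -> int:
    """Direct-access medium: the head moves straight to the target's track.

    Rotational delay is ignored in this toy model, so one record is read
    no matter where the target sits.
    """
    return 1


if __name__ == "__main__":
    # Hypothetical record positions, chosen only to show the trend.
    for index in (0, 99, 9_999):
        print(index, tape_records_read(index), drum_records_read(index))
```

In this model the tape cost grows linearly with the position of the record, while the drum cost stays flat, which is the "loss of time" described for tape.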

Floppy disk or Diskette Storage Device

A diskette or floppy disk is a flexible plastic disk covered with magnetic material, which allows a limited amount of data to be recorded on it and read back. The disk itself is thin and flexible, enclosed in a thin rectangular or square plastic shell. These disks are now out of fashion. The common sizes are 3½ and 5¼ inches, and they survive mainly in older personal computers.

Those were the four types of magnetic storage devices of a computer system. In my next article, I will share some info on Six Types of Optical Storage Devices of a Computer, which will help you understand them before buying.

Saturday, November 13, 2010

AMD Phenom II Six Core 1090T V/s Intel Core i7 920

Eight months ago AMD introduced its second generation of Phenom II X4 processors, designed to use the AM3 socket while remaining backwards compatible with previous AM2/AM2+ motherboards. Although the Phenom II X4 965 was AMD’s flagship desktop processor, it wasn’t the performance that kept users glued to it but rather the value for money it brought to the table, along with processors like the X2 550/555, which could be unlocked to four cores depending on the combination of chip and motherboard.

Whether your allegiance lies with AMD or Intel, there is no denying that competition is a good thing for us consumers. Now you may ask why Intel aficionados should be happy about AMD’s hexa-core processor launch. Intel has a hexa-core processor, the i7 980X (aka Gulftown), but it retails for $1,000, out of reach for the majority of enthusiasts in their sane minds, and Intel has no roadmap for launching an affordable hexa-core CPU. We will find out in the next few pages whether AMD’s new hexa-core 1090T Black Edition puts enough pressure on the competition to force Intel into price cuts or maybe new product launches.

The new Phenom II X6 1090T Black Edition that we are benchmarking today operates at 3.2GHz with a 16x clock multiplier (200MHz slower than the 965) but with a turbo speed of 3.6GHz, which should make non-overclockers very happy because it means free performance in single-threaded applications. This new Phenom is based on the new Thuban architecture and features 6MB of shared L3 cache, with each core having its own dedicated 512KB of L2 cache (3MB total L2 cache, or 9MB of L2 and L3 combined). And before we forget, it’s not just the X6 1090T that is being launched today; there are other processors as well. The basic specs of the processors are listed below.
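
The clock and cache figures quoted here can be cross-checked with a little arithmetic. The sketch below assumes a 200MHz reference clock (which is what a 16x multiplier at 3.2GHz implies) and takes the 6MB shared L3 figure from the feature list; the variable and function names are made up for illustration.

```python
REFERENCE_CLOCK_MHZ = 200  # assumption: implied by 16 x 200MHz = 3.2GHz

CORES = 6
L2_PER_CORE_KB = 512   # per-core L2, as quoted in the review
L3_SHARED_MB = 6       # shared L3, as quoted in the feature list


def core_clock_mhz(multiplier: float) -> float:
    """Core clock is the reference clock times the multiplier."""
    return multiplier * REFERENCE_CLOCK_MHZ


stock_mhz = core_clock_mhz(16)                       # stock speed
turbo_mhz = stock_mhz + 400                          # quoted turbo speed
turbo_multiplier = turbo_mhz / REFERENCE_CLOCK_MHZ   # multiplier turbo implies

l2_total_mb = CORES * L2_PER_CORE_KB / 1024          # total L2 across cores
combined_cache_mb = l2_total_mb + L3_SHARED_MB       # L2 + L3 together

if __name__ == "__main__":
    print(stock_mhz, turbo_mhz, turbo_multiplier)
    print(l2_total_mb, combined_cache_mb)
```

Under these assumptions the turbo speed corresponds to an 18x multiplier, and the 9MB figure is the sum of L2 and L3 rather than L3 alone.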

1090T Featureset

Let’s have a quick look at the die picture of the 1090T. We won’t waste your time blabbering on about conclusions drawn from the picture.

• True Six Core Processing

• AMD Turbo CORE Technology

• L1 Cache: 128KB (64KB Instruction + 64KB Data) x6(six-core)

• L2 Cache: 512KB x6(six-core)

• L3 Cache: 6MB Shared L3

• 45-nanometer SOI (silicon-on-insulator) technology

• HyperTransport™ 3.0 16-bit/16-bit link at up to 4000MT/s full duplex; or up to 16.0GB/s I/O bandwidth

• Up to 21GB/sec dual channel memory bandwidth

• Support for unregistered DIMMs up to PC2 8500 (DDR2-1066MHz) and PC3 10600 (DDR3-1333MHz)

• Direct Connect Architecture

• AMD Balanced Smart Cache

• AMD Dedicated Multi-cache

• AMD Virtualization™ (AMD-V™)Technology

• AMD PowerNow™ 3.0 Technology

• AMD Dynamic Power Management

• Multi-Point Thermal Control

• AMD CoolCore™ Technology
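
The two bandwidth figures in the list above follow from transfer rate times bus width. The sketch below is a rough check, not vendor data: it assumes the 21GB/sec memory figure refers to two 64-bit channels of DDR3-1333 (PC3 10600), and the helper name `bandwidth_gb_s` is made up for illustration.

```python
def bandwidth_gb_s(transfers_mt_s: float, width_bits: int, lanes: int = 1) -> float:
    """Peak bandwidth in GB/s: mega-transfers per second x bytes per transfer.

    `lanes` multiplies the result, e.g. 2 for both directions of a
    full-duplex link or for two memory channels.
    """
    bytes_per_transfer = width_bits / 8
    return transfers_mt_s * bytes_per_transfer * lanes / 1000


# HyperTransport 3.0: 16-bit link at 4000MT/s, counted in both directions.
ht_gb_s = bandwidth_gb_s(4000, 16, lanes=2)

# Memory: assumed DDR3-1333 (1333MT/s), 64-bit channel, two channels.
mem_gb_s = bandwidth_gb_s(1333, 64, lanes=2)

if __name__ == "__main__":
    print(f"HyperTransport: {ht_gb_s:.1f} GB/s")
    print(f"Dual-channel memory: {mem_gb_s:.1f} GB/s")
```

With these inputs the HyperTransport link works out to 16.0GB/s and the memory to roughly 21.3GB/s, in line with the quoted "up to" values.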

In AMD’s words – “AMD Phenom™ II X6 Processors were designed for extreme megatasking, multi-threaded applications, and entertainment. To enable you to do more than you’ve ever imagined: create, edit, render, encode/decode and transfer dense HD content while watching HD content, burning CDs or DVDs while downloading music and video.” We will certainly see in the upcoming pages whether they have been successful in their endeavor. But first let’s look at the differences between the various new chipsets AMD has come out with.

Unfortunately, today’s benchmarks were carried out on an 890GX chipset and not the 890FX chipset, which is supposed to give the best overclocking results.

And before we move on, so that you get to know a bit more about the 890FX chipset, do check the architecture of the 890FX below.

System Specs and Benchmark List

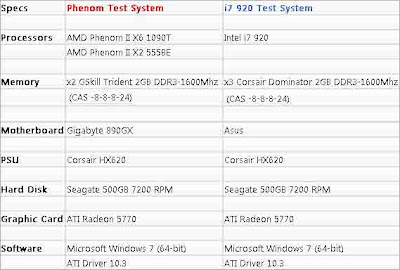

Due to a shortage of time, we have only a limited number of setups for comparison. But I guess this should be enough to see where the 1090T stands compared to AMD’s existing product line and the target competition.

We will be comparing the setups using the benchmarks listed below.

Multithreaded 2D Benchmarks

• wPrime

• Everest CPU Queen

• WinRAR

• MainConcept Encoding

• PCMark Vantage

Memory Bandwidth Benchmark

• SiSoft Sandra

3D Synthetic Benchmarks

• 3DMark06

• 3DMark Vantage

• Cinebench

Games

• Crysis Warhead

• Far Cry 2

Turbo CORE

Let’s talk about the new feature in the X6 series of CPUs. In my understanding, Turbo CORE plays with the CPU’s TDP headroom: when three or more cores are idle, it automatically boosts the remaining (up to three) active cores and throttles down the idle ones. On the 3.2GHz Phenom II X6 1090T it can boost the clock by up to 400MHz, for a final speed of 3.6GHz. Quite impressive if you ask me.
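
The rule as described above can be written down as a small decision function. This is only a sketch of my understanding, not AMD’s actual algorithm: the function name, the idle threshold parameter, and especially the 800MHz idle clock are placeholders chosen for illustration.

```python
STOCK_MHZ = 3200
BOOST_MHZ = 400
IDLE_MHZ = 800        # placeholder: the throttled-down speed is an assumption
IDLE_THRESHOLD = 3    # "three or more cores are idle"


def turbo_core_clocks(active: list[bool]) -> list[int]:
    """Return a target clock per core under the rule described in the text.

    `active[i]` is True when core i is busy. If enough cores are idle, busy
    cores are boosted and idle cores are throttled; otherwise every core
    stays at the stock clock.
    """
    idle_count = sum(1 for is_active in active if not is_active)
    if idle_count < IDLE_THRESHOLD:
        return [STOCK_MHZ] * len(active)
    return [STOCK_MHZ + BOOST_MHZ if is_active else IDLE_MHZ
            for is_active in active]


if __name__ == "__main__":
    # One busy core out of six, as with a single-threaded benchmark.
    print(turbo_core_clocks([True, False, False, False, False, False]))
    # All six cores busy: no boost expected.
    print(turbo_core_clocks([True] * 6))
```

A single-threaded run leaves five cores idle, so under this reading the one busy core should be the one sitting at 3.6GHz.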

Unfortunately it seems to me this is simply a marketing gimmick or failed engineering. I tried running a couple of single-threaded programs like SuperPi and PiFast at the stock speed of 3.2GHz, but at no point did any of the cores jump to 3.6GHz. At most, one of the cores jumped to 3.4GHz only to fall back to 3.2GHz, and at no point did any of the cores throttle down.

Though I should also add that I was not using an 890FX board, but I don’t think Turbo CORE is chipset restricted, or at least it simply should not be. On second thought, it might be BIOS dependent and need a motherboard BIOS update. We will wait for the verdict on this in the next couple of weeks.

Overclocking

This is the section which excites me the most; after all, I write reviews so that I can have access to the latest hardware (whoops!!! spilled the secret). Let’s push it to the limits then, on air only of course. But there is more to come: LN2 results should be out in a week or two.

Before we begin, let me tell you that the sample we have is a retail unit, so we would expect other processors to reach the same level or better, though many factors come into play when overclocking. First off we tried the stock cooler, which has seen some improvement over the 965’s stock cooler. Hold your breath: we were able to reach speeds of up to 4.4GHz, which was only 2D stable though. 4.2GHz was 3D stable on the stock cooler in an air-conditioned room.

Hugely impressed with the initial overclock on the stock cooler, we slapped on a sample of the CoolerMaster V10 cooler that we had. Temperatures decreased, but our overclock didn’t improve. So next was disabling cores (remember, this won’t give much of a performance boost to most users, but can be done just to get maybe a second faster time in SuperPi). With the Gigabyte motherboard we had, we were able to disable all but two of the cores from the BIOS. And with vCore pushed to 1.6V we were able to achieve 4.6GHz, which was stable enough to run SuperPi. After all is said and done, the reviewer can now go to sleep in peace.

Our retail review sample was able to reach 4.6GHz with only two cores and 1.6V, but for a 24/7 setup we were able to achieve 4.2GHz at 1.55V. This is certainly good news, since judging by our retail review sample the 1090T has more overclocking headroom than the 965BE processors out there while adding two extra cores.

Price and Conclusion

The 1090T and 1055T are the first real hexa-cores within reach of 99% of enthusiasts, since Intel’s 980X retails for around $1,000 (more than Rs. 55000 in the Indian retail market), though if you are looking purely at performance numbers then I am sure the 980X simply has no competition. We will have a 980X for comparison very soon, so stay glued to Erodov :p. As of now the 1090T is selling for around Rs. 15000 and the 1055T for Rs. 10100 in India.

The 1090T is impressive, but which group of enthusiasts should really be looking forward to upgrading? I would say anyone involved in video work, Photoshop, or rendering would be my first target group. AMD’s new flagship processor is easily able to keep up with Intel’s 920 and in some tests betters it. Gamers, I would say, would not really see much benefit from going to either Intel’s or AMD’s hexa-core just now. There is really no benefit in having extra cores when they are not being used for gaming. If you are a gamer and want more performance out of your C2D or Phenom II computer then I would suggest an SSD as an upgrade for sure, especially if you play multiplayer games.

Of course, if you are an overclocker at heart and would like to buy a future-proof system for the next 2-3 years then the 1090T has plenty to offer. Our system was able to do 4.6GHz for a very short time for some 2D benchmarking, 4.4GHz for benchmarking all cores with 2D benchmarking software like wPrime, and 4.2GHz for our full benchmarking suite. It will be interesting to see how much it can do under liquid nitrogen, which we will most probably be trying this weekend. We have been told that this hexa-core’s better overclocking headroom is mostly down to GlobalFoundries’ addition of a low-k dielectric to its 45nm manufacturing process, because of which these chips leak less current, draw less power and output less heat. Just a few months ago AMD had a 140W quad core, and today they are delivering a hexa-core within a 125W power envelope. That’s quite a feat if you ask me.

In the end, we are at a crossroads where the 1090T has brought AMD much closer to Intel than they have been in recent times. It’s pretty hard to clearly announce a winner and to recommend one company over the other, but whichever company you choose to build your rendering/gaming/enthusiast system, you’re probably not going to regret it.
